Lower Network Threat Detection cost with Computational Storage

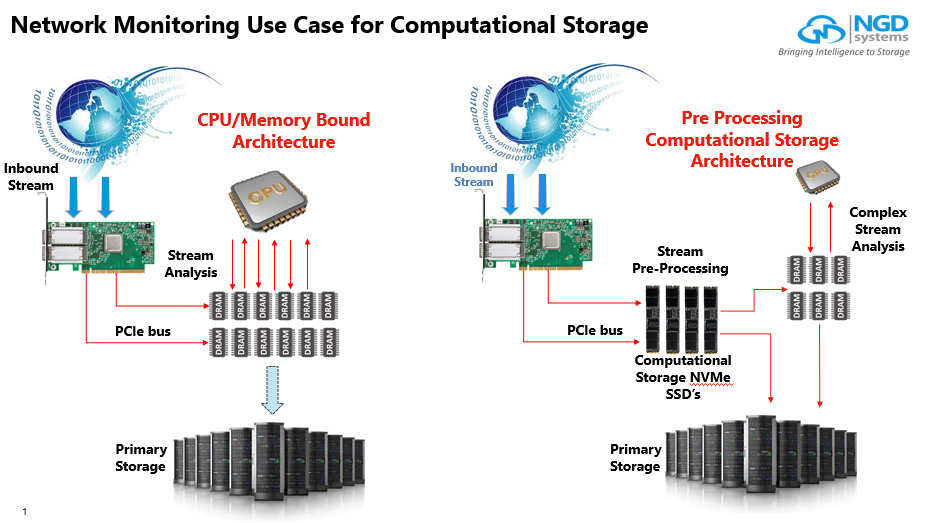

Network Threat Detection Systems need large amounts of DRAM and CPU to analyse traffic streams. When Computational NVMe SSDs pre-process the streams, the clean streams can bypass the main CPU and DRAM and move directly to primary storage, whereas only the dirty streams are moved to DRAM and the CPU for deeper inspection. The left-hand side of the figure shows the classical CPU/DRAM-bound architecture; the right-hand side shows Computational NVMe SSDs taking over the pre-processing compute tasks.

The clear benefits are:

- An analysis buffer that scales by adding lower-cost NVMe SSDs, as opposed to more expensive DRAM

- In-situ processing lets each incoming stream be pre-processed and then either sent directly to primary storage (if clean) or sent into main memory for further analysis (if dirty)

- The architecture reduces system cost in two ways: less DRAM is needed for the analysis buffer, and fewer x86 CPU cycles are needed for analysis, which can reduce the core count. Most of these boxes use tens of cores, so the potential savings are substantial.
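
The clean/dirty split described in the list above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the actual on-drive software: the `Stream` and `Router` names, the byte patterns, and the in-memory lists standing in for primary storage and host DRAM are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical signatures; a real detector would use a full rule engine.
SUSPICIOUS_PATTERNS = (b"/etc/passwd", b"<script>", b"DROP TABLE")


@dataclass
class Stream:
    stream_id: int
    payload: bytes


@dataclass
class Router:
    # Stand-ins for the two destinations described in the text.
    primary_storage: list = field(default_factory=list)  # clean streams
    host_queue: list = field(default_factory=list)       # dirty streams

    def prefilter(self, stream: Stream) -> bool:
        """Cheap check standing in for on-drive pre-processing.

        Returns True when the stream looks dirty.
        """
        return any(p in stream.payload for p in SUSPICIOUS_PATTERNS)

    def route(self, stream: Stream) -> str:
        """Send dirty streams to the host, clean streams to storage."""
        if self.prefilter(stream):
            self.host_queue.append(stream)
            return "host"
        self.primary_storage.append(stream)
        return "storage"


if __name__ == "__main__":
    router = Router()
    for s in (Stream(1, b"GET /index.html"), Stream(2, b"GET /<script>")):
        print(s.stream_id, router.route(s))
```

Only the streams that land in `host_queue` would consume host DRAM and CPU cycles in this model, which is where the cost reduction claimed above would come from.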

Computational Storage is a concept with the power to deliver a substantial business benefit: faster results at a lower cost per result. With a fully functional quad-core 64-bit ARM processor on each NVMe SSD in the server, the SSDs not only store large amounts of data but can also process and analyze the data right where it is stored. The main CPU, GPU and DRAM are used only for very demanding compute tasks, whereas secondary functions such as search, indexing, pre-analytics and encryption are processed inside the NVMe SSD. All the SSDs work together as compute nodes in a distributed compute cluster inside the server chassis.
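
To make the "SSDs as compute nodes" idea concrete, here is a minimal scatter/gather sketch. It is a simulation under stated assumptions: `DriveNode` and `cluster_search` are hypothetical names, each drive is modelled as a plain Python object holding its own records, and a thread pool stands in for the drives' on-board ARM cores.

```python
from concurrent.futures import ThreadPoolExecutor


class DriveNode:
    """Hypothetical model of one SSD that can search its own data."""

    def __init__(self, name, records):
        self.name = name
        self.records = list(records)

    def local_search(self, keyword):
        # Runs "inside the drive": only matching records leave the node.
        return [r for r in self.records if keyword in r]


def cluster_search(drives, keyword):
    """Scatter the query to every drive, then gather the partial results."""
    if not drives:
        return []
    with ThreadPoolExecutor(max_workers=len(drives)) as pool:
        # map() preserves drive order, so results are deterministic.
        partials = pool.map(lambda d: d.local_search(keyword), drives)
        return [hit for part in partials for hit in part]


if __name__ == "__main__":
    drives = [
        DriveNode("ssd0", ["alpha error", "beta ok"]),
        DriveNode("ssd1", ["gamma error", "delta ok"]),
    ]
    print(cluster_search(drives, "error"))
```

In this model the host only merges the hits, so the volume of data crossing to the main CPU shrinks to the size of the result set rather than the full dataset.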

With Computational Storage, overall compute performance and storage capacity per node increase significantly, while the system requires less equipment, less I/O, less power and less floor space.

The result: faster results at lower processing cost.

About the author