Immersion-Cooled Storage Now Available

Immersion cooling for software-defined storage makes it possible to process data wherever in the world it is created, without the need for a datacenter.

We are convinced that immersion cooling is the future. With liquid cooling, heat is no longer removed by (directed) air flows but by a liquid, which has a greater heat-exchange capacity than air. There is also virtually no loss from unnecessarily cooling empty server racks and blanking plates, as happens with air cooling.

New Partnership with RNT Rausch

We are proud to announce that our new partner RNT Rausch has joined us on our journey to build on NVMe capabilities and offer customers solutions that are simple, scalable, cost-efficient, and integrate with any orchestration system on any server or any cloud. #NVMEoverTCPIP #S3onNVME #Storageonsteroids

RNT Rausch is a Germany-based technology pioneer with 20+ years of experience in the high-tech server and storage industry. Its mission is to stay ahead of technology trends and to rethink future-proof server and storage designs that go hybrid, tackle business challenges, and make SMBs, enterprises, data centres and service providers around the world fit for tomorrow’s technical revolution. As RNT puts it, “our brain’s always on.” RNT is making IT possible.

Why CDNs Like Computational Storage

When most people think of content delivery networks (CDNs), they think about streaming huge amounts of content to millions of users, with companies like Akamai, Netflix, and Amazon Prime coming to mind. What most people don’t think about in the context of CDNs is computational storage – why would these guys need a technology as “exotic” as in-situ processing? Sure, they have a lot of content – Netflix has nearly 7K titles in its library, while Amazon Prime has almost 20K titles; but at 5GB per title, that is only 35TB for Netflix, and 100TB for Amazon Prime. These aren’t the petabyte sizes that one typically thinks of when discussing computational storage.

So why would computational storage be important to CDNs? Two phrases summarize it all: encryption/Digital Rights Management (DRM), and locality of service. For CDNs that serve up paid content, the user’s right to access the content must be verified (this is the DRM part), and then the content must be encrypted with a key that is unique to that user’s equipment (computer, tablet, smartphone, set-top box, etc.). When combined with the need to position points of presence (PoPs) in multiple global locations, the cost of this infrastructure (if based on standard servers) can be significant.

Computational storage helps to significantly reduce these costs in a couple of ways. Our ability to search subscriber databases while on the SSD eliminates the need for expensive database servers, significantly reducing the PoP footprint. Our ability to encrypt content on our computational storage devices also eliminates the servers that typically perform this task. When you consider that six of our 16TB U.2 SSDs could hold the entire Netflix library (with three SSDs for redundancy), you can see how this technology could be important to CDNs. Want more information on how computational storage can help the content delivery network industry? Just contact us at nvmestorage.com.
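
The sizing above is simple arithmetic, and it can be checked with a few lines of Python. This is only a rough sketch: it assumes decimal units (1 TB = 1,000 GB), the 5GB-per-title average quoted above, and that the three redundancy SSDs simply match the three data SSDs one for one. The function names are made up for illustration.

```python
import math

GB_PER_TB = 1000  # assumption: decimal units, as in the figures above


def library_size_tb(titles: int, gb_per_title: float = 5.0) -> float:
    """Approximate library size in TB for a given number of titles."""
    return titles * gb_per_title / GB_PER_TB


def drives_needed(size_tb: float, drive_tb: float = 16.0) -> int:
    """Smallest number of drives whose raw capacity covers size_tb."""
    return math.ceil(size_tb / drive_tb)


netflix_tb = library_size_tb(7_000)      # about 7K titles
prime_tb = library_size_tb(20_000)       # about 20K titles
data_drives = drives_needed(netflix_tb)  # 16TB drives holding the library
total_drives = data_drives * 2           # assumption: one redundant drive per data drive

print(netflix_tb, prime_tb, data_drives, total_drives)
# prints: 35.0 100.0 3 6
```

Three 16TB drives cover the 35TB library with room to spare, and doubling them for redundancy gives the six SSDs mentioned above.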

Amsterdam is blocking new datacenters.

Too much energy consumption and space allocation! What can we do?

Datacenter capacity in and around Amsterdam grew by 20% in 2018 according to the Dutch Data Center Association (DDA), outpacing rival data center locations such as London, Paris and Frankfurt. But that growth comes with a problem: data centers claim too much space and consume too much energy. The Amsterdam city council has therefore decided to impose a temporary building stop on new data centers until a new policy is in place to regulate growth.

At present, local governments have no control over new initiatives, and energy suppliers have a legal obligation to provide power. The problem is that the growth of datacenter capacity runs counter to the climate ambitions that governments have adopted in legislation. Regulation will therefore be mandated that enforces sustainable growth with green energy and the delivery of residual heat back to consumer households, in line with those climate ambitions.

For now, the building stop is for one year. The question is what we can do in the meantime. Or are we going to sit, wait and do nothing?

If you cannot grow in performance and capacity outside the current floor space, the most sensible thing to do is to reclaim space within the existing datacenters. There are a few practical changes possible that have minor impact on operations but a huge impact on density and efficient usage of currently available floor space.

- Use lower-power, larger-capacity NVMe SSDs instead of spinning disks and high-performance, energy-slurping first-generation NVMe SSDs. Standard 32TB NVMe SSDs that draw less than 12W are already available. Equipping a 24-slot server with them delivers 768TB of storage capacity in just 2U of rack space at less than ½ watt/TB. NGD Systems is the front runner in delivering these largest-capacity NVMe SSDs at the lowest power consumption rates. No changes required: just install and benefit from low power and large capacities.

- Reduce the number of servers, CPUs and RAM, and reduce data movement, by processing secondary compute tasks such as inference, encryption, authentication and compression on the NVMe SSD itself. NGD Systems calls this Computational Storage. Simply explained: put a quad-core ARM CPU on every NVMe SSD, and standard Linux applications can run directly on the drive. A 24-slot 2U server can host 96 additional Linux cores that augment the existing server, creating an enormously efficient compute platform at very low power and replacing many unbalanced x86 servers. Change required: look at the application landscape, determine which applications use too many resources, and migrate them off one by one.

- Disaggregate storage from CPU. There is huge inefficiency in server farms: lots of idle CPU time and unbalanced storage-to-CPU ratios. Eliminating this imbalance is relatively simple and increases storage and CPU utilization and efficiency. Application servers mount exactly the storage volumes they require from a networked storage server over the existing network infrastructure, at the same low latencies as if the NVMe SSD were inside the server chassis. The people at Lightbits Labs have made it their mission to tackle the problem of storage inefficiencies in the datacenter. Run POCs to determine where the improvements are.

- The simplest way to reclaim space is to throw away what you are no longer using, or to move it to where rent is cheaper and space is widely available. If you know what data you have and what it is worth, actions to save, move or delete that data can be put into policies and automated. Komprise has the perfect toolset to analyze, qualify and move data to where it sits best, including the waste bin. Run a simple pilot and check the cost savings.

What is happening in the Amsterdam area today is something we will see more and more, and it will kick off many more initiatives in other areas to regulate data center growth and bring it in line with our climate ambitions. If we are banning polluting diesel cars from our inner cities and taxing them, why could we not have the same discussion about power-slurping old metal boxes with SATA spinning rust in data centers? I am pretty sure that regulators will encourage good behavior with permits and discourage bad behavior with taxes in the not too distant future.

Maybe it is time to start thinking about Watts/TB instead of $/GB.
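
To make the Watts/TB metric concrete, here is a small Python sketch that recomputes the figures quoted in the list above: a 24-slot 2U server filled with 32TB drives at 12W each, with four ARM cores per drive. The `DriveConfig` class and its field names are invented for this illustration, and the 12W figure is treated as an upper bound since the drives are quoted at "less than 12W".

```python
from dataclasses import dataclass


@dataclass
class DriveConfig:
    """Hypothetical description of one drive model in a storage server."""
    capacity_tb: float
    power_w: float            # upper bound on drive power draw
    cores_per_drive: int = 4  # quad-core ARM CPU on a computational SSD
    drives_per_server: int = 24

    @property
    def watts_per_tb(self) -> float:
        return self.power_w / self.capacity_tb

    @property
    def server_capacity_tb(self) -> float:
        return self.capacity_tb * self.drives_per_server

    @property
    def server_drive_power_w(self) -> float:
        # Power drawn by the drives only, not the whole server.
        return self.power_w * self.drives_per_server

    @property
    def server_extra_cores(self) -> int:
        return self.cores_per_drive * self.drives_per_server


nvme_32tb = DriveConfig(capacity_tb=32, power_w=12)
print(nvme_32tb.watts_per_tb)          # 0.375
print(nvme_32tb.server_capacity_tb)    # 768
print(nvme_32tb.server_drive_power_w)  # 288
print(nvme_32tb.server_extra_cores)    # 96
```

The same class can be filled in with the capacity and power numbers of the drives you run today to see how far apart the two Watts/TB values are.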

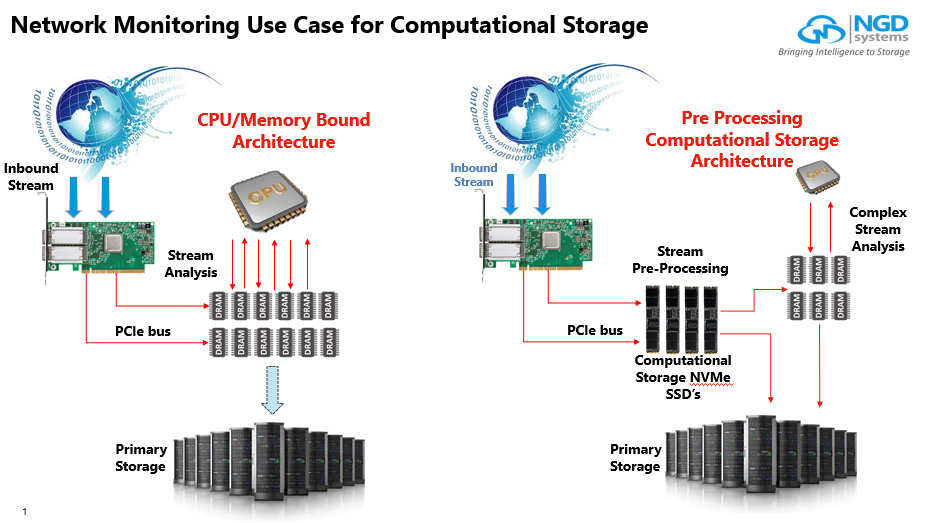

Lower Network Threat Detection cost with Computational Storage

Network threat detection systems require huge amounts of DRAM and CPU to analyse streams. When computational NVMe SSDs pre-process the streams, clean streams can bypass the main CPU and DRAM and move directly to primary storage, whereas only the dirty streams are moved to DRAM and CPU for deeper inspection. The left-hand side of the picture shows the classical CPU/DRAM-bound architecture; the right-hand side shows computational NVMe SSDs taking over the pre-processing compute tasks.

The clear benefits are:

- Infinitely scalable analysis buffer using lower-cost NVMe SSDs (as opposed to more expensive DRAM)

- In-Situ processing allows incoming streams to be pre-processed and either sent directly to primary storage (if clean) or sent into main memory for further analysis (if dirty)

- The architecture reduces system cost by reducing the amount of DRAM needed for the analysis buffer and the x86 CPU cycles required for analysis, which can reduce core count (most of these boxes use tens of cores, so there is a lot of money on the table here)
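
The clean/dirty split described above can be pictured with a short Python sketch. It is an illustration only: the source does not describe how the drives decide whether a stream is clean, so a plain byte-signature match stands in for the real pre-processing, and `Stream`, `RoutingResult`, `looks_suspicious` and `pre_process` are all hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List, Sequence


@dataclass
class Stream:
    stream_id: int
    payload: bytes


@dataclass
class RoutingResult:
    to_primary_storage: List[int] = field(default_factory=list)
    to_deep_inspection: List[int] = field(default_factory=list)


def looks_suspicious(stream: Stream, signatures: Sequence[bytes]) -> bool:
    """Cheap first-pass check (assumed): does the payload contain a known signature?"""
    return any(sig in stream.payload for sig in signatures)


def pre_process(streams: Sequence[Stream], signatures: Sequence[bytes]) -> RoutingResult:
    """Route clean streams to primary storage and dirty ones to host CPU/DRAM."""
    result = RoutingResult()
    for stream in streams:
        if looks_suspicious(stream, signatures):
            result.to_deep_inspection.append(stream.stream_id)
        else:
            result.to_primary_storage.append(stream.stream_id)
    return result


streams = [
    Stream(1, b"GET /index.html"),
    Stream(2, b"header EVIL-MARKER body"),
    Stream(3, b"plain telemetry data"),
]
routing = pre_process(streams, signatures=[b"EVIL-MARKER"])
print(routing.to_primary_storage)  # [1, 3]
print(routing.to_deep_inspection)  # [2]
```

In this picture only stream 2 ever reaches the main CPU and DRAM, which is where the savings on memory and core count would come from.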

Computational Storage is a concept that has the power to deliver a huge business benefit: faster results at a lower cost per result. With a fully functional quad-core 64-bit ARM processor on each NVMe SSD in the server, the SSDs are not only used to store large amounts of data but can also process and analyze data right where it was stored in the first place. The main CPU, GPU and DRAM are only used for very demanding compute tasks, whereas secondary functions such as search, indexing, pre-analytics and encryption are processed inside the NVMe SSD. All the SSDs work together as compute nodes in a distributed compute cluster inside the server chassis.

With Computational Storage, overall compute performance and storage capacity per node increase significantly, while requiring less equipment, less IO, less power and less floor space.

The result: faster results at lower processing cost.
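
The idea of the SSDs acting as compute nodes inside the chassis can be sketched as a scatter-gather search in Python. This is a toy model under stated assumptions: worker threads stand in for the ARM cores on each drive, in-memory lists stand in for the data stored on each drive, and `search_on_drive` and `search_all_drives` are hypothetical names rather than an NGD Systems API.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List


def search_on_drive(records: List[str], term: str) -> List[str]:
    """Stand-in for work done on the drive: scan local records, return only matches."""
    return [record for record in records if term in record]


def search_all_drives(drives: Dict[str, List[str]], term: str) -> List[str]:
    """Host side: send the query to every drive, then merge the small result lists."""
    with ThreadPoolExecutor(max_workers=max(1, len(drives))) as pool:
        futures = [
            pool.submit(search_on_drive, records, term)
            for records in drives.values()
        ]
        matches: List[str] = []
        for future in futures:
            matches.extend(future.result())
    return sorted(matches)


drives = {
    "ssd0": ["alice@example.com", "bob@example.com"],
    "ssd1": ["carol@example.org", "alice@example.org"],
}
print(search_all_drives(drives, "alice"))
# ['alice@example.com', 'alice@example.org']
```

The point of the pattern is that only the matches travel back to the host; the full data set never has to be copied into host memory.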

NGD Systems Computational Storage Devices Now Support Containers for Flexible Application Deployment

Catalina-2 intelligent storage devices now natively support containers, enabling applications to run with near-data processing.

“Analytic applications in a variety of edge computing and IoT deployments are highly space-constrained, and cannot support traditional computing platforms,” said Vladimir Alves, Chief Technology Officer at NGD Systems. “By utilizing In-Situ Processing and new form factors such as EDSFF, these applications now have a powerful, high-density, low power computing solution. Our support for Docker extends these capabilities by allowing containerized applications to execute seamlessly within NGD Storage Devices.”

Download the product brief by filling in your details below.

Turbocharging Artificial Intelligence With NGD Systems Computational Storage

We have all seen demonstrations of the capabilities of Artificial Intelligence (AI)-based imaging applications, from facial recognition to computer vision assisted application platforms. However, scaling these imaging implementations to Petabyte-scale for real-time datasets is problematic because:

- Traditional databases hold symbolic information in structured tables, with each image having a row in the index. The tables are then cross-linked and mapped to additional indexes. For performance reasons, these index tables must be maintained in memory.

- Traditional implementations for managing datasets require that data be moved from storage to server memory before the applications can analyze it. Since these datasets are growing to Petabyte scale, analyzing complete datasets in a single server’s memory is all but impossible.

Utilizing brute-force methods on modern imaging databases, which can be in the petabyte-size range, is often both incredibly difficult and enormously expensive. This has forced organizations with extremely large image databases to look for new approaches to image similarity search and more generally to the problem of data storage and analysis.

To read the whole paper, please fill in your details below.

NGD Systems Delivers Industry-First 16TB NVMe Computational Storage Device in a 2.5” U.2 SSD

The latest addition to the In-Situ Processing family of devices enables petabyte-scale for a variety of important “big data” analytics and IoT applications

NGD Systems, Inc., the leader in computational storage, today announced the general availability (GA) of the 16 terabyte (TB) Catalina-2 U.2 NVMe solid state drive (SSD). The Catalina-2 is the first NVMe SSD with 16TB capacity that also makes available NGD’s powerful “In-Situ Processing” capabilities. The Catalina-2 does this without impact to the reliability, quality of service (QoS) or power consumption, already available in the current shipping NGD Products.

The use of Arm® multi-core processors in Catalina-2 provides users with a well-understood development environment and the combination of exceptional performance with low power consumption. The Arm-based In-Situ Processing platform allows NGD Systems to pack both high capacity and computational ability into the first 16TB 2.5-inch form factor package on the market. The NGD Catalina-2 U.2 NVMe SSD only consumes 12W (0.75W/TB) of power, compared to the 25W or more used by other NVMe solutions. This provides the highest energy efficiency in the industry.

“With the exponential growth of big data, AI/ML, and IoT, more processing power and storage is required at the edge,” said Neil Werdmuller, director, storage solutions, Arm. “By including multiple Arm cores into its SSDs, NGD Systems delivers the computation required at the edge and the ability to scale processing in step with storage growth. This is achieved within a very tight power budget and without adding expensive additional processors, memory or GPUs.”

NGD Systems’ 16TB Catalina-2 creates a paradigm shift that permits large data sets, near real-time analytics, high-density, and low cost to coexist. A single standard 2U server available with 48 hot-swappable U.2 bays can reach 768TB, over three-quarters of a petabyte (PB). The In-Situ SSDs augment the server processing power with the availability of the embedded 64-bit ARM application processors. NGD Systems demonstrated the value of computational storage with over 500x improvement in a 3M vector similarity search at the Open Compute Project (OCP) Summit last month in San Jose, Calif.

“Artificial Intelligence (AI), Machine Learning (ML), and Computer Vision (CV) applications are all restricted by today’s traditional storage technology,” said Eli Tiomkin, Vice President of Business Development, NGD Systems. “The NGD Systems In-Situ Processing capabilities embedded in the world’s first 16TB U.2 In-Situ SSD allows our customers to deal with data sets in a manner that truly enables AI at the Edge.”

The NGD Systems Catalina-2 U.2 is in production now and is available for immediate deployment. The Catalina-2 U.2 SSD is available in capacities of 4TB, 8TB, and the world’s only 16TB NVMe Computational Storage product.

About NGD Systems

Founded in 2013 with its headquarters in Irvine, Calif., NGD Systems (http://www.ngdsystems.com) is a venture-funded company focused on the creation of a new category of storage devices that brings computation to data. NGD has designed its own advanced proprietary NVMe controller technology, which deploys a patented Elastic FTL algorithm and Advanced LDPC Engines to provide industry-leading capacity and scalability. The platform also deploys the patented In-Situ Processing technology to enable Computational Storage capability. The company is led by an executive team that helped drive and shape the flash storage industry, with decades in leadership positions with storage companies such as Western Digital, STEC, Memtech, and Micron.

Join us at ISC 2018 in Frankfurt – June 24-28

Closing the Storage Compute Gap

The advent of high-performance, high-capacity flash storage has changed the dynamics of the storage-compute relationship. Today, a handful of NVMe flash devices can easily saturate the PCIe bus complex of most servers. To address this mismatch, a new paradigm is required that moves computing capabilities closer to the data. This concept, which is known as “In-Situ Processing”, provides storage platforms with significant compute capabilities, reducing the computing demands on servers.

For applications with large data stores and significant search, indexing, or pattern matching workloads, In-Situ Processing offers much quicker results than the traditional scenario of moving data into memory and having the CPU scan these large data stores. In-Situ Processing can also enable existing applications to scale to much greater levels than is currently possible with discrete storage and computing resources. Because computation capabilities scale linearly as storage is added into compute nodes, In-Situ Processing can enable new classes of applications for enterprises and cloud service providers.

At NGD Systems, we are blazing the trail of in-situ processing for storage devices. Our in-situ storage architecture and Catalina products make the deployment of many large dataset applications possible and practical, whether from an access time, power/cooling, or real estate standpoint. If you would like to find out more on how you can benefit, please contact us for further discussions on how In-Situ Processing can help solve your data center and business issues.