NGD Systems Computational Storage Devices Now Support Containers for Flexible Application Deployment

Catalina-2 intelligent storage devices now natively support containers, enabling applications to run with near-data processing.

“Analytic applications in a variety of edge computing and IoT deployments are highly space-constrained and cannot support traditional computing platforms,” said Vladimir Alves, Chief Technology Officer at NGD Systems. “By utilizing In-Situ Processing and new form factors such as EDSFF, these applications now have a powerful, high-density, low-power computing solution. Our support for Docker extends these capabilities by allowing containerized applications to execute seamlessly within NGD storage devices.”

Turbocharging Artificial Intelligence With NGD Systems Computational Storage

We have all seen demonstrations of the capabilities of artificial intelligence (AI)-based imaging applications, from facial recognition to computer-vision-assisted application platforms. However, scaling these imaging implementations to petabyte-scale, real-time datasets is problematic because:

- Traditional databases store symbolic information in structured tables, with each image having a row in the index. These tables are then cross-linked and mapped to additional indexes. For performance reasons, the index tables must be kept in memory.

- Traditional approaches to managing datasets require that data be moved from storage into server memory before applications can analyze it. Because these datasets are growing to petabyte scale, analyzing a complete dataset within a single server's memory is all but impossible.

Applying brute-force methods to modern imaging databases, which can reach the petabyte range, is often both incredibly difficult and enormously expensive. This has forced organizations with extremely large image databases to look for new approaches to image similarity search and, more generally, to the problem of data storage and analysis.
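The brute-force similarity search described above can be sketched as an exhaustive scan split across storage devices. This is an illustrative example, not NGD's implementation: it assumes each image is represented by an embedding vector, uses cosine similarity as the metric, and divides the dataset into hypothetical per-drive shards. Each shard computes its own top-k matches and the host only merges the candidates, so k results per shard, rather than the whole dataset, need to reach server memory.

```python
import heapq
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]
Shard = List[Tuple[str, Vector]]  # hypothetical: (image_id, embedding) pairs held by one drive


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def local_top_k(shard: Shard, query: Vector, k: int) -> List[Tuple[float, str]]:
    """Brute-force scan of one shard; in a near-data design this step would run on the drive."""
    return heapq.nlargest(k, ((cosine(vec, query), image_id) for image_id, vec in shard))


def search(shards: List[Shard], query: Vector, k: int) -> List[Tuple[float, str]]:
    """Host-side merge: only each shard's k best candidates are gathered and re-ranked."""
    candidates = [hit for shard in shards for hit in local_top_k(shard, query, k)]
    return heapq.nlargest(k, candidates)


# Toy example with two shards of 2-D embeddings.
shards = [
    [("img-a", [1.0, 0.0]), ("img-b", [0.0, 1.0])],
    [("img-c", [0.9, 0.1]), ("img-d", [-1.0, 0.0])],
]
results = search(shards, [1.0, 0.0], k=2)
print([image_id for _, image_id in results])
```

Because the global top-k is always contained in the union of every shard's local top-k, the merged result matches a single exhaustive scan while moving far less data to the host.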

NGD Systems Delivers Industry-First 16TB NVMe Computational Storage Device in a 2.5” U.2 SSD

The latest addition to the In-Situ Processing family of devices enables petabyte-scale deployments for a variety of important “big data” analytics and IoT applications

NGD Systems, Inc., the leader in computational storage, today announced the general availability (GA) of the 16 terabyte (TB) Catalina-2 U.2 NVMe solid state drive (SSD). The Catalina-2 is the first NVMe SSD with 16TB capacity that also provides NGD’s powerful “In-Situ Processing” capabilities. The Catalina-2 does this without compromising the reliability, quality of service (QoS), or power consumption already offered by NGD’s currently shipping products.

The use of Arm® multi-core processors in Catalina-2 provides users with a well-understood development environment that combines exceptional performance with low power consumption. The Arm-based In-Situ Processing platform allows NGD Systems to pack both high capacity and computational ability into the first 16TB 2.5-inch form factor package on the market. The NGD Catalina-2 U.2 NVMe SSD consumes only 12W (0.75W/TB), compared to the 25W or more used by other NVMe solutions. This provides the highest energy efficiency in the industry.
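The 0.75W/TB figure is the drive's power draw divided by its capacity (12W / 16TB). A minimal sketch of that normalization follows. The comparison line is an assumption for illustration only: the release does not state the capacity of the 25W solutions, so the example hypothetically gives them the same 16TB.

```python
def watts_per_tb(power_w: float, capacity_tb: float) -> float:
    """Power draw normalized by capacity, in watts per terabyte."""
    return power_w / capacity_tb


# 12W for the 16TB Catalina-2, as quoted above.
catalina_2 = watts_per_tb(12, 16)

# Hypothetical comparison: a 25W drive assumed to have the same 16TB capacity.
other_at_25w = watts_per_tb(25, 16)

print(f"Catalina-2: {catalina_2} W/TB, 25W drive: {other_at_25w} W/TB")
```

Normalizing by capacity matters at scale: when a rack is sized by terabytes rather than by drive count, W/TB determines the power budget.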

“With the exponential growth of big data, AI/ML, and IoT, more processing power and storage is required at the edge,” said Neil Werdmuller, director, storage solutions, Arm. “By including multiple Arm cores into its SSDs, NGD Systems delivers the computation required at the edge and the ability to scale processing in step with storage growth. This is achieved within a very tight power budget and without adding expensive additional processors, memory or GPUs.”

NGD Systems’ 16TB Catalina-2 creates a paradigm shift that allows large data sets, near real-time analytics, high density, and low cost to coexist. A single standard 2U server with 48 hot-swappable U.2 bays can reach 768TB, over three-quarters of a petabyte (PB). The In-Situ SSDs augment the server's processing power with their embedded 64-bit Arm application processors. NGD Systems demonstrated the value of computational storage with an over 500x improvement in a 3-million-vector similarity search at the Open Compute Project (OCP) Summit last month in San Jose, Calif.
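The 768TB figure follows directly from multiplying the bay count by the drive capacity. The sketch below reproduces that arithmetic and, as an added illustration, totals the drives' power using the 12W per-drive figure quoted for Catalina-2. Both totals are raw values: the sketch assumes decimal units (1PB = 1000TB) and ignores RAID, overprovisioning, and the power drawn by the server itself.

```python
BAYS_PER_2U_SERVER = 48  # hot-swappable U.2 bays, as quoted above
DRIVE_CAPACITY_TB = 16   # Catalina-2 U.2 capacity
DRIVE_POWER_W = 12       # per-drive power figure quoted for Catalina-2

# Raw capacity of a fully populated server (no RAID or spare drives assumed).
raw_capacity_tb = BAYS_PER_2U_SERVER * DRIVE_CAPACITY_TB
raw_capacity_pb = raw_capacity_tb / 1000  # decimal units: 1 PB = 1000 TB

# Power for the drives alone; CPUs, fans, and PSU losses are not included.
drive_power_w = BAYS_PER_2U_SERVER * DRIVE_POWER_W

print(f"{raw_capacity_tb} TB ({raw_capacity_pb} PB) raw, {drive_power_w} W for the drives")
```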

“Artificial Intelligence (AI), Machine Learning (ML), and Computer Vision (CV) applications are all restricted by today’s traditional storage technology,” said Eli Tiomkin, Vice President of Business Development, NGD Systems. “The NGD Systems In-Situ Processing capabilities embedded in the world’s first 16TB U.2 In-Situ SSD allow our customers to deal with data sets in a manner that truly enables AI at the Edge.”

The NGD Systems Catalina-2 U.2 is in production now and is available for immediate deployment. The Catalina-2 U.2 SSD is available in 4TB, 8TB, and 16TB capacities; the 16TB model is the world’s only 16TB NVMe computational storage product.

About NGD Systems

Founded in 2013 and headquartered in Irvine, Calif., NGD Systems (http://www.ngdsystems.com) is a venture-funded company focused on creating a new category of storage devices that bring computation to the data. NGD has designed advanced proprietary NVMe controller technology that uses its patented Elastic FTL algorithm and Advanced LDPC Engines to provide industry-leading capacity and scalability. The platform also uses the patented In-Situ Processing technology to enable computational storage. The company is led by an executive team that helped drive and shape the flash storage industry, with decades of experience in leadership positions at storage companies such as Western Digital, STEC, Memtech, and Micron.

Join us at ISC 2018 in Frankfurt, June 24-28

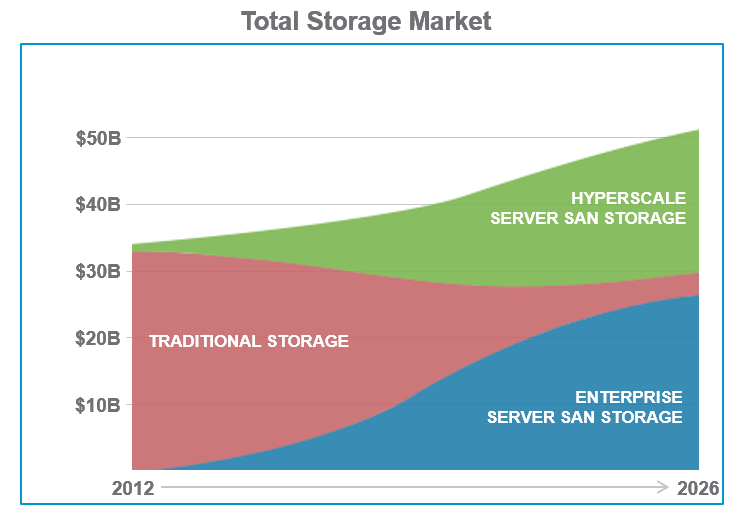

Closing the Storage Compute Gap

The advent of high-performance, high-capacity flash storage has changed the dynamics of the storage-compute relationship. Today, a handful of NVMe flash devices can easily saturate the PCIe bus complex of most servers. To address this mismatch, a new paradigm is required that moves computing capabilities closer to the data. This concept, known as “In-Situ Processing”, gives storage platforms significant compute capabilities, reducing the computing demands on servers. For applications with large data stores and significant search, indexing, or pattern-matching workloads, In-Situ Processing delivers results much faster than the traditional approach of moving data into memory and having the CPU scan these large data stores.

In-Situ Processing can also enable existing applications to scale to much greater levels than is currently possible with discrete storage and computing resources. Because computational capability scales linearly as storage is added to compute nodes, In-Situ Processing can enable new classes of applications for enterprises and cloud service providers.
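The scaling argument above can be illustrated with a toy throughput model. This is a hypothetical sketch, not NGD data: every bandwidth figure is an assumed placeholder, and the model ignores host CPU limits, query selectivity, and the cost of returning results. Under these assumptions, a host-side scan is capped by the shared host link once a handful of drives saturate it, while an in-situ scan grows linearly with the number of drives.

```python
def host_scan_gb_s(num_drives: int, drive_read_gb_s: float, host_link_gb_s: float) -> float:
    """Host-side scan: all data crosses the shared host link, so throughput saturates."""
    return min(num_drives * drive_read_gb_s, host_link_gb_s)


def in_situ_scan_gb_s(num_drives: int, drive_scan_gb_s: float) -> float:
    """In-situ scan: each drive scans its own data, so aggregate throughput is additive."""
    return num_drives * drive_scan_gb_s


# Hypothetical parameters for illustration only, in GB/s; not measured figures.
DRIVE_READ_GB_S = 3.0   # assumed per-drive read bandwidth toward the host
DRIVE_SCAN_GB_S = 1.0   # assumed per-drive on-device scan rate (slower than raw reads)
HOST_LINK_GB_S = 16.0   # assumed usable host-side bandwidth shared by all drives

for n in (4, 8, 16, 32, 48):
    host = host_scan_gb_s(n, DRIVE_READ_GB_S, HOST_LINK_GB_S)
    in_situ = in_situ_scan_gb_s(n, DRIVE_SCAN_GB_S)
    print(f"{n:2d} drives: host-side {host:5.1f} GB/s, in-situ {in_situ:5.1f} GB/s")
```

Even with a per-drive scan rate assumed well below the raw read bandwidth, the in-situ total overtakes the host-side ceiling once enough drives are installed; where that crossover falls depends entirely on the assumed parameters.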

At NGD Systems, we are blazing the trail of In-Situ Processing for storage devices. Our In-Situ storage architecture and Catalina products make many large-dataset applications possible and practical to deploy, whether in terms of access time, power and cooling, or real estate. To find out how In-Situ Processing can help solve your data center and business challenges, please contact us.